How do college students use digital flashcards during self-regulated learning?

Inez Zung (a,b), Megan N. Imundo (a) and Steven C. Pan (a,c)

(a) Department of Psychology, University of California, Los Angeles, CA, USA; (b) Department of Psychology, University of California, San Diego, CA, USA; (c) Department of Psychology, National University of Singapore, Singapore, Singapore

ABSTRACT

Over the past two decades, digital flashcards – that is, computer programmes, smartphone

apps, and online services that mimic, and potentially improve upon, the capabilities of

traditional paper flashcards – have grown in variety and popularity. Many digital flashcard

platforms allow learners to make or use flashcards from a variety of sources and customise

the way in which flashcards are used. Yet relatively little is known about why and how

students actually use digital flashcards during self-regulated learning, and whether such uses

are supported by research from the science of learning. To address these questions, we

conducted a large survey of undergraduate students (n = 901) at a major U.S. university. The

survey revealed insights into the popularity, acquisition, and usage of digital flashcards,

beliefs about how digital flashcards are to be used during self-regulated learning, and

differences in uses of paper versus digital flashcards, all of which have implications for the

optimisation of student learning. Overall, our results suggest that college students

commonly use digital flashcards in a manner that only partially reflects evidence-based

learning principles, and as such, the pedagogical potential of digital flashcards remains to be

fully realised.

ARTICLE HISTORY

Received 20 December 2021

Accepted 22 March 2022

KEYWORDS

Digital flashcards; online learning technologies; distributed practice; retrieval practice; self-regulated learning

Today’s students have access to a wide array of educational

technologies, including modern implementations of tra-

ditional learning tools. For instance, digital flashcards (also

called computer flashcards, electronic flashcards, or virtual

flashcards) duplicate the functions of conventional paper

flashcards (i.e., index cards that typically contain related

information on each side of the card, such as a concept

and its explanation or a practice problem and its worked

answer), including the ability to engage in self-testing by

using one side as a prompt to recall information on the

reverse side. However, unlike paper flashcards, digital flash-

cards are typically created, stored, and used via websites,

smartphone apps, and/or other programmes. Digital flash-

cards also offer more functions than paper flashcards,

including greater control over one’s progress during learning, the ability to present different types of materials (e.g.,

multimedia), options to configure the order and frequency

of flashcards (e.g., using spaced repetition algorithms), easy

removal or retention of individual cards for further study

(via “dropping” or “starring” functions), built-in games,

greater varieties of practice questions, and more.

Since their commercial debut over two decades ago

(e.g., Texas Instruments, 2001), digital flashcards have

become increasingly popular and are now used across a

wide range of devices. More than a dozen systems are

available (“List of flashcard software,” 2021), and many

continue to add features. For instance, one of the most

popular digital flashcard services, Quizlet, boasts over 50

million active users per month (Glotzbach, 2019) and has

expanded from a solely web-based platform to both

web-based and mobile applications, plus added games

and different usage modes. There is little doubt that

digital flashcards will, over time, continue to gain even

more users and become increasingly differentiated from

paper flashcards.

Given their growing complexity, the use of digital flash-

cards to engage in self-regulated learning – that is, when an

individual manages their learning entirely, starting from

the planning of learning activities and extending

through evaluating the effects of those activities (Bjork et al., 2013; Winne & Hadwin, 1998) – presents an increasingly challenging set of decisions for many students. Students must decide which platform to use, which

materials to learn, and how to make or obtain flashcards.

After the digital flashcards are ready, they must decide

which learning activities to engage in – for instance, self-

© 2022 Informa UK Limited, trading as Taylor & Francis Group

CONTACT Inez Zung [email protected] Department of Psychology, University of California, 9500 Gilman Drive, La Jolla, San Diego, CA 92093-0109,

USA; Steven C. Pan

[email protected] Department of Psychology, Faculty of Arts and Social Sciences, College of Humanities and Sciences, National

University of Singapore, 9 Arts Link, Singapore, 117572, Singapore

Inez Zung and Steven C. Pan changed institutional affiliations from UCLA to their current institutions in the duration of this project, which is reflected in

their listed affiliations above.

MEMORY

https://doi.org/10.1080/09658211.2022.2058553

testing, games, or simply reading or reviewing. They must

also decide the timing, quantity, frequency, and setting of

flashcard use (e.g., two weeks before an exam, large sets or

small sets, daily or weekly, during their commute, etc.).

Finally, as they cycle through each flashcard, students

may choose whether to turn the flashcard around and

view the reverse side (which, if self-testing is used, pro-

vides an opportunity to check the accuracy of one’s

responses) or simply move on, and if starring or dropping

functions are available, whether to prioritise some flash-

cards and/or drop others from further study. Importantly,

given that research into digital flashcards is still in its

infancy (cf. Altiner, 2019; Dizon & Tang, 2017; Hung,

2015; Sage et al., 2016, 2019), relatively few evidence-

based recommendations for their use currently exist, and

as such, students often have to rely on little more than

intuition in making such decisions. Further, although a

recent review of flashcard programmes suggests that they

may facilitate effective learning strategies such as succes-

sive relearning (a combination of retrieval practice and

spacing; Dunlosky & O’Brien, 2020), researchers have yet

to thoroughly investigate how students actually use

these programmes.

Of the many ways that digital flashcards can be used,

which do college students, who are among the most

common users of such flashcards, tend to prefer? Why do

they prefer doing so? Are these preferences stable or

altered by metacognitive judgments or study contexts?

We addressed these questions by conducting the first-

ever large-scale survey of digital flashcard use at a major

public university. Our survey explored such issues as how

digital flashcards are made or obtained (e.g., types of

content; self-made vs. pre-made flashcards), how students

use and practice with digital flashcards (e.g., using dropping

functions; types of learning activities), potential uses of

digital flashcards with peers, and whether common usage

patterns align with four potentially effective ways to use

flashcards, all of which are rooted in evidence-based learning research (Dunlosky et al., 2013; Pan & Bjork, 2022; Weinstein et al., 2018): self-testing, correct answer feedback,

spacing out learning, and generating answers.

Potentially effective uses of flashcards for learning

Educators and researchers often recommend using flash-

cards for learning because they can facilitate beneficial

self-testing (e.g., Smith & Weinstein, 2016), which capita-

lises on the well-established retrieval practice effect. The

retrieval practice effect, also known as the testing effect,

is the phenomenon wherein taking a memory test on

some material improves long-term retention of that

material relative to non-retrieval-based methods such as

restudying (e.g., Bjork, 1975; Carrier & Pashler, 1992; Roedi-

ger & Karpicke, 2006). Although the retrieval practice litera-

ture does not focus on flashcards per se, it is plausible that

its findings can be extrapolated to flashcard use given that

the retrieval practice effect is robust across different test

formats and methods of engaging in self-testing (e.g.,

Rowland, 2014). Surveys of paper flashcard use indicate

that self-testing is a common activity among students

when using this study tool (Wissman et al., 2012). The

present survey investigated whether similar patterns

occur for digital flashcards.

Beyond the retrieval practice effect, flashcards can also be used to confer the benefits of correct answer feedback.

Given that flashcards typically contain a cue on one side

and a definition or answer on the reverse side, students

can attempt to productively retrieve the information

cued by the front of the flashcard before flipping it over

to receive feedback. The retrieval practice effect is

enhanced by correct answer feedback (e.g., Pan, Hutter,

et al., 2019; for review see Rowland, 2014), as it can help

learners to maintain their own correct response (Butler

et al., 2008) or adjust their response if they made errors

(Kang et al., 2007). However, surveys of paper flashcard

use suggest that about one-third of students infrequently

check the back of their flashcards after testing themselves

(Wissman et al., 2012), and in one empirical study, students

even dropped flashcards from study after no correct retrievals if they felt so unduly confident in their retrieved

response that they declined to check the back of the

flashcard (Kornell & Bjork, 2008). The present survey

explored the possibility that feedback may also be under-

utilised among users of digital flashcards.

Another potentially effective way to use flashcards is by

spacing out study sessions and items. The spacing effect

refers to the retention advantage when learning events

are spaced apart in time as compared to when they are

massed together (e.g., Cepeda et al., 2006; Donovan &

Radosevich, 1999). Spacing with digital flashcards has

been shown to enhance learning: Kornell (2009) investi-

gated spacing both within and between learning sessions

when using a web-based study programme to learn voca-

bulary word-pairs. In the first experiment exploring within-

session spacing, participants studied one large set of flash-

cards (spacing) or four smaller sets (massing), with the

number of repetitions for each item across conditions

held constant. Those in the spacing condition performed

better on a final cued recall test than those in the

massing condition. A second experiment added

between-session spacing by having participants study

across four days, either by using the large set twice each

day (spacing) or using one small set eight times each

day (massing), and the addition of between-session

spacing enhanced the spacing effect. This benefit of

spacing held even when all participants were given a

final review session during which both conditions restu-

died all the word-pairs twice. Kornell’s findings provide

potent evidence for how flashcards might be used to

implement spacing: Students can use larger flashcard

sets rather than splitting items into smaller sets, and do

so across multiple days. They might also avoid dropping

flashcards from further study, thereby maintaining the

spacing between items and strengthening learning via

additional practice. However, students do not typically

capitalise on the potential benefits of spacing when

using paper flashcards, as they often prefer to use

smaller flashcard sets when studying and believe that

smaller sets are better for learning than larger flashcard

sets (Wissman et al., 2012). (For a more detailed discussion

and comparison of implementing successive relearning in

different flashcard programmes, see Dunlosky & O’Brien,

2020.) In the present survey, we addressed whether

similar patterns occur for digital flashcards, and moreover,

whether common digital flashcard features are used in

ways that promote or do not promote spaced learning

opportunities.
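The contrast between studying one large set and several smaller sets can be made concrete with a small simulation. The sketch below is illustrative only: the number of word pairs (20), the number of repetitions (4), the set size (5), and the helper names are hypothetical and are not taken from Kornell (2009). It counts how many other cards intervene between successive presentations of the same card under each arrangement.

```python
def cycle_through(items, repetitions):
    """Study a set by going through it in order `repetitions` times."""
    return [item for _ in range(repetitions) for item in items]


def repetition_gaps(schedule):
    """For each item, list how many other cards appear between its consecutive presentations."""
    last_seen = {}
    gaps = {}
    for position, item in enumerate(schedule):
        if item in last_seen:
            gaps.setdefault(item, []).append(position - last_seen[item] - 1)
        last_seen[item] = position
    return gaps


def mean_gap(schedule):
    """Average number of intervening cards across all repetitions of all items."""
    all_gaps = [g for item_gaps in repetition_gaps(schedule).values() for g in item_gaps]
    return sum(all_gaps) / len(all_gaps)


# Hypothetical numbers: 20 word pairs, each studied 4 times in both arrangements.
items = [f"pair_{i}" for i in range(20)]
repetitions = 4

# One large set: cycle through all 20 cards four times.
large_set_schedule = cycle_through(items, repetitions)

# Four smaller sets of 5 cards: finish every repetition of one set before starting the next.
small_sets = [items[i:i + 5] for i in range(0, len(items), 5)]
small_set_schedule = [card for s in small_sets for card in cycle_through(s, repetitions)]

print(mean_gap(large_set_schedule))  # 19.0
print(mean_gap(small_set_schedule))  # 4.0
```

Under these assumed numbers, every card is presented equally often in both arrangements, yet the single large set places 19 other cards between repetitions while the smaller sets place only 4.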

Finally, flashcards can be used to capitalise on the

benefits of generation. The generation effect refers to the

finding that material that is generated (e.g., producing syno-

nyms) is better remembered than material that is simply

read (e.g., Bertsch et al., 2007; Slamecka & Graf, 1978). This

effect has been demonstrated with word pairs and fragments (e.g., Gardiner, 1988; Jacoby, 1978) as well as more

educationally relevant materials such as outlines and

study questions (e.g., Foos et al., 1994). The generation

effect holds even when students are asked to generate

answers to mathematical operations with the answer

present for reference (Crutcher & Healy, 1989). Generation

can occur with flashcards if students adapt information

from their notes or textbook to put on their own cards –

that is, when students self-generate flashcard content. In a

recent series of experiments, Pan et al. (2022) found that

learners exhibited better memory and transfer performance

when asked to create digital flashcards before using them,

as opposed to using already pre-made digital flashcards.

The present survey addressed whether students are

willing to generate their own digital flashcards or are

deterred by the time and effort needed to do so.

Potential factors influencing digital flashcard use

Flashcards are often used in informal, self-regulated set-

tings outside of the classroom, where choices that result

in ineffective or inefficient learning are common (e.g.,

Bjork et al., 2013; Dunlosky et al., 2013). According to

the desirable difficulties framework (Bjork, 1994), some

learning activities (e.g., retrieval practice) slow immediate

increases in performance and appear to reduce learning

during acquisition, but paradoxically improve learning

over the long term, whereas other learning activities (e.g., restudying) appear to ease the acquisition of new information but may not be effective over the long term. As students often conflate learning and performance (Soderstrom & Bjork, 2015), they may opt for learning activities and methods that increase short-term

performance rather than durable learning. Students’ pre-

ference for smaller sets of paper flashcards (despite

knowing that spaced study is better than massed

study, as in Wissman et al., 2012) is consistent with

that account, and similar patterns might also be

observed with digital flashcards. Other factors that may

lead to ineffective uses of digital flashcards include inac-

curate metacognition even when holding accurate

knowledge about principles of learning, a lack of knowl-

edge about and experience with managing one’s own

learning, and individual differences in academic ability

(which are the basis for a series of exploratory analyses

discussed later in this manuscript), among other

characteristics.

Some features unique to digital flashcards may also

influence whether students make effective learning

decisions and/or engage in successful learning activities.

Common features of this kind are summarised in Table 1. On

the positive side, digital flashcard platforms can be

designed or configured to, for example, force users to

check the backs of their flashcards after attempting

retrieval (e.g., Anki). Some flashcard services also capita-

lise on the spacing effect by integrating algorithms that

take the decision-making power away from users,

thereby forcing items to be revisited regardless of

whether a user feels they have learned them adequately.

In addition, dropping flashcards can be prevented by

design in digital formats, which could benefit learning

by enforcing repeated testing and inter-item spacing.

On the negative side, however, if the aforementioned

features are not enabled, then users may not experience

any of the associated benefits. Moreover, some digital

flashcard features, such as dropping functions, could

be used to prematurely terminate the use of specific flashcards, which may reduce learning. Finally, the widespread availability of pre-made digital flashcards could

also deprive users of the potential benefits of generating

their own flashcards. To address these considerations,

the present survey investigated potentially productive

and unproductive uses of common digital flashcard

features.

More generally, digital flashcards may have some

inherent advantages over paper flashcards that help

users learn more effectively. For example, Ashcroft et al.

(2016) found that Japanese university students learning

Table 1. Potential impacts of common digital flashcard features on student learning.

Feature                          Potential impact on learning
Ability to “flip” flashcards     Opportunities for retrieval practice, correct answer feedback
Dropping functions               Reduces opportunities for spacing
Making one’s own flashcards      Opportunities for generation
Multimedia presentation          Opportunities for dual coding
Required checking of feedback    Opportunities for correct answer feedback
Required revisiting of cards     Opportunities for spacing
Shuffling capability             Opportunities for varied practice
Starring functions               Opportunities for spacing, additional learning of specific cards
Using pre-made flashcards        Reduces opportunities for generation

English as a second language exhibited greater vocabulary

learning gains after using digital flashcards compared to

using paper flashcards, and suggested that the digital

format may have benefited these students more due to

the greater variety of activities offered by the flashcard

service used in the experiment (Quizlet) and the greater

level of control that the service exerted over participants’

learning activities. All of these digital flashcard features

may have aided in sustaining engagement and motiv-

ation. However, whether such benefits of digital flash-

cards generalise to other materials remains to be

determined (for other comparisons of digital versus

paper flashcards, see Dizon & Tang, 2017; Sage et al.,

2016; Sage et al., 2019), and more broadly, it is unclear how digital flashcard platforms may support self-regulated learning in ways that paper flashcards cannot. Accordingly, the present survey included questions comparing beliefs and practices pertaining to

digital versus paper flashcards.

Method

Participants

During the survey period, which began on 23 September

2020, and closed on 30 January 2021, 988 undergraduate

students from the subject pool at the University of Cali-

fornia, Los Angeles (UCLA) accessed the survey. Of

those students, 901 respondents (81%, n = 729 women;

18%, n = 160 men; 0.67%, n = 6 non-binary; 0.11%, n = 1

pangender; 0.22%, n = 2 other; 0.33%, n = 3 chose not

to respond) completed the survey in its entirety and

received partial course credit. We did not explicitly

collect data on year at UCLA; however, the age breakdown of participants suggests that approximately 64% of the sample were likely underclassmen and 36% were likely upperclassmen (M_age = 20.1 years).

Of the respondents, 4.4% (n = 40) reported majors in the humanities (e.g., linguistics, English), 7.3% (n = 66) in

the social sciences (e.g., political science, education,

public affairs), 72.5% (n = 653) in the life sciences (e.g.,

biology, psychology), 4.3% (n = 39) in the physical

sciences (e.g., chemistry, mathematics), 0.7% (n = 6) in

engineering (e.g., bioengineering, computer science),

and 0.9% (n = 8) in visual and performing arts (e.g., film,

dance). Nineteen respondents (2.1%) reported their

major as undeclared, 0.7% (n = 6) as other, 6.8% (n = 61)

as two or more, and 0.3% (n = 3) chose not to respond.

A full list of majors represented among the sample is at

this study’s Open Science Framework (OSF) repository.

Additionally, less than 1% (0.44%, n = 4) of respondents

identified as American Indian or Alaska Native, 48% (n

= 429) as Asian, 3% (n = 28) as Black or African American,

12% (n = 105) as Hispanic or Latinx, 4% (n = 38) as Middle

Eastern, 25% (n = 222) as white, 7% (n = 64) as two or

more races/ethnicities, 0.67% (n = 6) as other, and

0.55% (n = 5) chose not to respond. The survey was

approved by UCLA’s Institutional Review Board and admi-

nistered online.
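The reported demographic percentages follow directly from the counts. As a minimal sketch, the snippet below recomputes the gender breakdown from the counts given above and confirms that the categories account for all completed surveys; the variable names are illustrative and not part of the study materials.

```python
# Counts as reported for respondents who completed the survey.
gender_counts = {
    "women": 729,
    "men": 160,
    "non-binary": 6,
    "pangender": 1,
    "other": 2,
    "chose not to respond": 3,
}

# The categories should account for every completed survey.
total = sum(gender_counts.values())

# Convert each count to a percentage of the completed sample.
percentages = {label: 100 * n / total for label, n in gender_counts.items()}

print(total)  # 901
for label, pct in percentages.items():
    print(f"{label}: {pct:.2f}%")
```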

Materials

We developed a 47-question survey to investigate the

prevalence and characteristics of digital flashcard use

among college students. It encompassed three broad cat-

egories of interest:

1. How students make and/or obtain digital flashcards,

and their reasons for doing so;

2. How students use digital flashcards to support their

learning; and

3. How students’ practices and attitudes compare

between digital and paper flashcards.

The survey was developed (a) in consultation with pre-

vious surveys addressing digital learning tools (e.g., Dor-

nisch, 2013), (b) by adapting items from a prior survey on

how and when students engage in self-testing with flashcards in general (Wissman et al., 2012),¹ (c) by drawing on the experiences of the first author and anecdotes solicited from other undergraduates, and (d) by considering

the four evidence-based methods outlined in the Intro-

duction. Prior to data collection, the questions were eval-

uated in a pilot test involving five UCLA undergraduate

students, which informed minor adjustments to clarify

wording and answer options. At the conclusion of the

survey, we also included nine questions addressing

general study and technology habits; the results from

those questions can be found at this study’s OSF

repository.

The survey was programmed in Qualtrics (Qualtrics,

Provo, UT) and could be accessed via any internet

browser. The survey questions were ordered from

general to specific, grouped by topic, and preceded by a

series of demographic questions. A definition of “digital

flashcards” was also presented at the start of the survey

to provide relevant context:

Digital flashcards are similar to paper flashcards. Such cards

typically have a “front” and “back” wherein related information

is presented. However, digital flashcards are created, stored,

and used through digital means (e.g., accessed on a computer,

phone, or tablet application, or on a website) rather than on

physical cards.

Respondents were permitted to decline answering any

question except those that determined survey flow (e.g.,

respondents indicating that they had not ever used

paper flashcards were automatically excluded from ques-

tions addressing the use of paper flashcards).

The majority of the survey questions involved multiple-

choice (i.e., select the best-fitting answer option) or mul-

tiple-selection format (i.e., select all applicable options).

Questions addressing the frequency of certain behaviours

featured five answer options (always, often, sometimes,

rarely, and never), whereas questions addressing beliefs or behaviours included answer choices specific to the question

and, in most cases, the option to specify an “other” response

(cf. Pan et al., 2020). The remainder of the questions were

open-ended. These questions involved a numerical open-

response format (i.e., input a number) or an open-text

response format (i.e., respond in 1–2 sentences).

Scoring

Data from the multiple-choice and multiple-selection

questions were tabulated within Qualtrics, with no

further scoring necessary. Coding keys (each containing

10–20 categories) for the open-text response questions

were generated by the first two authors from examination

of themes from a subset of responses to each question. All

open text-response questions were scored by two inde-

pendent raters using coding keys. Because the coding

keys were designed to allow raters to categorise each

response with more than one code, interrater percentage

agreement (see McHugh, 2012 for discussion on percen-

tage agreement and chance agreement) was calculated

using fractions in place of Cohen’s kappa (which is not

well-suited to accurately capture interrater reliability in

cases wherein multiple answers can be coded for a

single survey item; see Cohen, 1960). An interrater score

of 1 indicated total agreement between raters (e.g., Rater
1: 6; Rater 2: 6), a score of 0 indicated total disagreement

(e.g., Rater 1: 4; Rater 2: 5), and a fractional score indicated

partial agreement (e.g., Rater 1: 4, 5, 7; Rater 2: 4, 6, 7

results in a score of 0.5). As the interrater percentage

agreement for the first 200 responses for each question

was acceptable (> 80%), all remaining responses were

each coded by a single rater.
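The exact formula behind the fractional scores is not spelled out here. One reading that reproduces the worked example (codes 4, 5, 7 versus 4, 6, 7 giving 0.5) is the share of codes that both raters assigned out of all codes that either rater assigned. The sketch below implements that reading; the function names, the intersection-over-union formula, and the orientation (larger values meaning more agreement, in line with the > 80% threshold) are assumptions rather than the authors' documented procedure.

```python
def agreement_fraction(codes_a, codes_b):
    """Share of codes assigned by both raters, out of all codes assigned by either rater."""
    a, b = set(codes_a), set(codes_b)
    if not a and not b:
        # Assumption: two raters who both assigned no code are treated as agreeing.
        return 1.0
    return len(a & b) / len(a | b)


def percentage_agreement(rated_pairs):
    """Mean agreement fraction across responses, expressed as a percentage."""
    fractions = [agreement_fraction(a, b) for a, b in rated_pairs]
    return 100 * sum(fractions) / len(fractions)


# The three rater pairings used as examples in the text.
print(agreement_fraction([6], [6]))              # 1.0 (identical codes)
print(agreement_fraction([4], [5]))              # 0.0 (no shared codes)
print(agreement_fraction([4, 5, 7], [4, 6, 7]))  # 0.5 (two of four distinct codes shared)
```

Averaging such fractions over a batch of double-coded responses with `percentage_agreement` gives a single figure that could be compared against a threshold such as 80%.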

Procedure

Respondents accessed the survey link via the subject pool

website (https://ucla.sona-systems.com/) and completed

the survey on their personal laptop, computer, or other

digital device. After giving consent to participate in the

study, respondents were directed to answer each question

as honestly as possible and then proceeded to the survey

questions. There was no set time limit, but the average

survey completion time was 20.9 min.²

Results and discussion

We first report general findings for digital flashcard use

among college students, followed by findings pertaining

to the aforementioned three broad categories of interest,

and then a set of exploratory correlational analyses.

Major findings are summarised in the text, with further

details included in accompanying tables and/or figures.

In the tables, for questions marked with “m”, respondents could select more than one answer option; for questions marked with “#”, numeric responses were given; and questions marked with “f” were open-ended and were typically answered in 1–2 sentences. The order of the questions in the tables generally mirrors that of the actual

survey with one prominent exception: For ease of expo-

sition, questions that only targeted paper flashcard use,

which duplicate those originally used in Wissman et al.

(2012), are presented in the Appendix. Additionally, in

several cases we report the total percentage of respon-

dents that selected any of several response options, each

of which is shown in the tables.

General characteristics of digital flashcard use

among college students

As detailed in Table 2, digital flashcards are a widely known

and remarkably popular learning tool among college stu-

dents. Over three-quarters of respondents (n = 701,

77.8%) reported using digital flashcards, and of those

respondents, well over half (63.9%) reported using digital

flashcards at least sometimes during their learning activi-

ties. (Unless noted otherwise, the remainder of reported

results involve the 701 respondents who answered that

they have used digital flashcards.)
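Combined figures such as the "at least sometimes" percentage are sums over individual response options. As a minimal sketch, the snippet below reproduces that figure from the Table 2, Question 3 percentages; the helper name `combined_percentage` is illustrative only.

```python
# Response distribution for Table 2, Question 3 (percent of the 701 digital flashcard users).
usage_frequency = {
    "Always": 2.1,
    "Often": 18.0,
    "Sometimes": 43.8,
    "Rarely": 34.2,
    "Never": 1.9,
}


def combined_percentage(distribution, options):
    """Total percentage of respondents who chose any of the listed options."""
    # Round to one decimal place to match the precision of the reported values.
    return round(sum(distribution[option] for option in options), 1)


at_least_sometimes = combined_percentage(usage_frequency, ["Always", "Often", "Sometimes"])
print(at_least_sometimes)  # 63.9
```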

Although a variety of online flashcard programmes or services are used, the dominance of one platform, at least currently, is evident: Nearly all respondents (99.3%) had experience with Quizlet, and the vast majority (89.7%)

reported most frequently using that platform. The next

most popular flashcard platform, Anki, is far less commonly

used (just 5.8% reported most frequently using it). Respon-

dents offered many reasons for their choice of platform,

with the three most common being ease of access (31.4%),

familiarity (27.2%), and the availability of pre-made

flashcard sets (21.0%). Digital flashcards are also most com-

monly used on a computer or laptop (70.1%), followed by

smartphones (25.7%). Tablets were rarely used (4.0%).

Digital flashcards are most frequently used for science, history, social sciences, and foreign languages (see Table 2, Question 7 for rankings).

In terms of content, digital flashcards are overwhelmingly

used to learn vocabulary (93.9%), followed by key facts

such as names or dates (74.6%) and concepts (70.2%).

Using digital flashcards to learn more complex material

(e.g., worked examples) is relatively rare (8.4%). Thus, replicating patterns that have been observed with paper flashcards (e.g., Wissman et al., 2012), digital flashcards tend to

be used for relatively unsophisticated content.

Importantly, students tend to regard digital flashcards

as helpful: An impressive 92.4% regard such flashcards as

at least moderately helpful for studying or learning.

How college students make and/or obtain digital

flashcards

Creating and obtaining digital flashcards

As detailed in Table 3, respondents more commonly

reported using copy-and-paste functions (36.7%) or

Table 2. General characteristics of digital flashcard use.

1. Prior to taking this survey, have you ever heard of digital flashcards before? (n = 901)

Response Frequency

Yes 85.0%

No 15.0%

2. Have you ever used digital flashcards before? (n = 901)

Response Frequency

Yes 77.8%

No 22.2%

3. In any of your studying or learning activities, do you use digital flashcards? How often do you use them? (n = 701)

Response Frequency

Always 2.1%

Often 18.0%

Sometimes 43.8%

Rarely 34.2%

Never 1.9%

4. Which, if any, of these digital flashcard services have you used? (n = 701) [m]

Service Frequency

Anki 15.1%

Brainscape 4.6%

Cram 0.7%

Quizlet 99.3%

Studyblue 5.6%

Study Stack 2.3%

Other 1.0%

5a. What digital flashcard service do you use the most? (n = 701) [f]

Service Frequency

Anki 5.8%

Brainscape 0.1%

Quizlet 89.7%

StudyBlue 0.0%

Other 0.3%

Response left blank 2.0%

5b. What is the primary reason for your use of this digital flashcard service as opposed to other services? Please answer in 1–2 sentences. (n = 701) [f]

Response category Frequency

Platform is free 6.8%

Platform is popular / peers or friends use same platform 16.8%

Instructors use platform 6.4%

Platform is the only one participant is familiar with (e.g., used or heard of) 27.2%

Ease of access (e.g., platform is user friendly, easy to use, convenient/accessible) 31.4%

Platform features 13.6%

Sharing 2.6%

Images 0.1%

Sound 0.1%

Games 2.7%

Participant is most comfortable/familiar with this platform 13.1%

Platform has pre-made sets 21.0%

Platform is easier to use/cheaper than paper flashcards 1.6%

Using the platform has led to positive results in the past 3.4%

Platform allows you to test yourself 6.8%

Saves paper/environmentally friendly 1.4%

Other 7.7%

Response left blank 1.9%

6. Of the time you spend using digital flashcards, what percentage of time do you use them on the following devices? Please put 0 if you do not use digital flashcards on that device. Note: These numbers should add up to 100%. (n = 701) [#]

Device Mean percent of time

Computer/laptop 70.1%

Phone 25.7%

Tablet 4.0%

Other 0.2%

7. What subject areas do you tend to use digital flashcards for? Please put the subject areas in order of how often you use digital flashcards for them, from most to least often. (n = 701)

Ranking (1–3, most to least often)

Subject area                     1st      2nd      3rd
Math                             0.1%     5.6%     7.3%
Science                          39.4%    21.5%    17.3%
History                          12.6%    25.1%    28.0%
English composition / writing    2.4%     4.4%     5.8%
Foreign languages                21.7%    18.0%    16.7%
Social sciences                  22.0%    24.1%    21.3%
Performing arts                  0.0%     0.1%     0.6%
Other                            1.4%     0.9%     2.3%

direct transcription (36.4%) to place information on (self-

made) digital flashcards, rather than typing the infor-

mation in their own words (26.8%). Pre-made digital

flashcards were most commonly obtained via a web

search (86.2%), with other, potentially more trustworthy

sources of digital flashcards (e.g., “Friends studying for

8. What type of information is typically on your digital flashcards? (n = 701) [m]

Information type Frequency

Concepts (e.g., equilibrium in science, interdependence in history) 70.2%

Equations/formulas 40.9%

Key facts (dates, names, etc.) 74.6%

Practice questions 37.8%

Vocabulary 93.9%

Worked examples 8.4%

Other 1.1%

9. In your opinion, how helpful are digital flashcards for your studying/learning? (n = 701)

Response Frequency

Not at all helpful 0.4%

Slightly helpful 7.1%

Moderately helpful 31.1%

Very helpful 46.1%

Extremely helpful 15.3%

Table 3. How pre-made and self-made digital flashcards are created or obtained, and why.

1. Please indicate how often you use the following methods to place information on your digital flashcards. Note: These numbers should add up to 100%.

(n = 701)

#

Method Mean percentage of time

Copy and paste 36.7%

Directly transcribe (e.g., type out word for word) 36.4%

Type up in my own words 26.8%

2. If you use pre-made digital flashcard sets, where do they come from? (n = 701)

m

Response Frequency

Friends studying for the same exam/class 47.2%

Friends who have taken the class previously 31.2%

Web search 86.2%

Other 4.0%

I do not use pre-made digital flashcard sets 5.1%

3. Do you ever put images on your digital flashcards, or use digital flashcards that have images on them? (n = 701)

Response Frequency

Yes, I make my own images 0.4%

Yes, I use pre-made images from other sources 31.5%

Yes, I use both my own and pre-made 8.6%

No 55.9%

The digital flashcard platform I use does not support images 3.6%

4. Of self-made and pre-made digital flashcard sets, what percentage of the time do you use each type of flashcards? Note: These numbers should add up to

100%. (n = 701)

#

Digital Flashcard Type Mean Percentage of Time

Pre-made 56.0%

Self-made 44.0%

5. If you choose to use pre-made digital flashcard sets rather than make your own digital flashcard sets, why do you do so? (n = 701)

m

Motive Frequency

I don’t have the time to make my own flashcard sets 70.2%

I trust the information on pre-made flashcard sets 37.4%

Pre-made flashcard sets are easily available 81.3%

Pre-made flashcard sets are higher quality 9.4%

Pre-made flashcard sets are more accurate 7.4%

Pre-made flashcard sets contain practice questions 34.7%

Other 2.3%

I do not use pre-made digital flashcards 5.7%

6. If you choose to make your own digital flashcards, rather than use pre-made digital flashcard sets, why do you do so? (n = 701)

m

Motive Frequency

I can control the information that goes on the card 76.2%

I enjoy making my own flashcard sets 16.3%

The act of making flashcards helps me learn the material 66.0%

The act of making flashcards helps me know what to study 44.8%

The flashcard sets I make are more accurate 40.5%

The flashcard sets I make are higher quality 23.7%

There are no pre-made flashcard sets for the material I need 37.8%

Other 2.0%

I do not make my own digital flashcards 10.4%


the same exam/class” or “Friends who have taken the class

previously”) endorsed less often (≤ 47.2%). Additionally,

55.9% of respondents reported not using or not making

digital flashcards with images, which suggests that most

users of digital flashcards are not using their multimedia

capabilities to their full extent.

Preference for pre-made versus self-made digital

flashcards

Pre-made digital flashcards are more commonly used than self-made digital
flashcards: Of the total time spent using digital flashcards, respondents
reported spending, on average, 56.0% of their time using cards made by
someone else and 44.0% of their time using self-made flashcards.
Contrasting rationales were offered for the choice of one flashcard type
over the other. Of the respondents that chose to use pre-made digital
flashcards, most (81.3%) did so because they were easily available,
followed closely by not having enough time to make their own digital
flashcards (70.2%). Few indicated that they chose to use pre-made digital
flashcards because they were of higher quality or more accurate than sets
that they generated themselves (≤ 9.4%). Of those that chose to use
self-made digital flashcards, however, most (76.2%) reported doing so
because of the ability to control the information placed on the card,
followed closely by the belief that the act of making flashcards served as
a learning opportunity (66.0%). Moreover, 64.2% of self-made flashcard
users did so because of the purported better accuracy or higher quality of
such flashcards over pre-made versions that could be found online.

Overall, the foregoing results suggest that although

college students tend to rely more on pre-made digital

flashcards, they more frequently doubt their accuracy as

compared to self-made flashcards. Indeed, as shown in

Figure 1, when asked to rate the accuracy of pre-made

and self-made digital flashcards, more respondents rated

self-made flashcards as very or extremely accurate

(87.3%), whereas fewer rated pre-made flashcards in the

same way (38.0%).

How college students use digital flashcards

As detailed in Table 4, respondents reported spending more of their
digital flashcard time practising recall or self-testing (a mean of 58.5%)
than studying, restudying, reading, or rereading (41.5%); that is, using
retrieval practice as opposed to less effective methods (Dunlosky et al.,
2013; Pan & Bjork, 2022). As for when students use digital flashcard sets,
few respondents reported

spacing out their digital flashcard use throughout an aca-

demic term. Instead, digital flashcard use was closely tied

to exam date: Roughly equal percentages of students

reported that they gradually increase their use of digital

flashcards as the exam approaches (28.4%), begin to use

digital flashcards in the week of the exam (35.1%), or,

perhaps most concerning, tend to use digital flashcards

just in the day or two before an exam (29.7%). College stu-

dents therefore do not appear to take advantage of oppor-

tunities to engage in distributed practice, or spacing,

between their study sessions when using digital flashcards.

Although there was a nearly equal split between respon-

dents who reported using digital flashcards in one large set

(49.2%) versus several smaller sets (50.8%), the mean

reported number of cards in each set for the top three

subject areas in which digital flashcards were used (as pre-

viously discussed) decreased by ranking but was still rela-

tively high overall (mean of 76.8 cards for the first ranked

subject, 55.5 for the second, and 45.1 for the third).

Use of digital flashcard features

Most respondents (86.5%) reported at least occasional use

of shuffle features that enable randomisation of the order

in which flashcards appeared. Most respondents (≥ 84.0%)

also reported using digital flashcard platforms that

enabled them to change how many times a card appears

by “starring” or marking it as “study later,” and/or gave

them control over when they could drop (i.e., remove

from further study) cards. However, whereas most respon-

dents did make use of “starring” or “study later” functions

(63.9%), nearly half did not endorse using drop functions

(49.8%). (It should be emphasised that the use and avail-

ability of “starring” or “study later” functions can occur

independently of drop functions.) This result suggests

that college students are (intentionally or inadvertently)

taking advantage of spacing between specific items, and

consequently receiving greater chances for additional

practice by keeping all the cards in a deck during study

sessions (i.e., students may be engaging in spacing

between repetitions of a card, even if they are not taking

advantage of spacing their study sessions). Further, even

when digital flashcards were dropped, most respondents

(69.0%) reported at least sometimes revisiting those

flashcards.
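The collapsed percentages reported in this section can be checked against the response distributions in Table 4. The sketch below is illustrative only: the dictionary names and the helper `share_at_least` are hypothetical, and the values are copied from Table 4 (Questions 5 and 9). Because the table entries are themselves rounded, the sums can differ from the percentages given in the text by about 0.1 percentage points.

```python
# Response distributions copied from Table 4 (percent of respondents, n = 701).
SHUFFLE = {"Always": 31.8, "Often": 33.2, "Sometimes": 21.4,
           "Rarely": 9.3, "Never": 4.3}
REVISIT_DROPPED = {"Always": 8.6, "Often": 17.4, "Sometimes": 43.1,
                   "Rarely": 21.7, "Never": 7.1}

# Response scale ordered from most to least frequent.
SCALE = ["Always", "Often", "Sometimes", "Rarely", "Never"]


def share_at_least(distribution, threshold):
    """Sum the percentages of all responses at or above `threshold`.

    Hypothetical helper for illustration; not part of the original analysis.
    """
    cutoff = SCALE.index(threshold)
    total = sum(distribution[level] for level in SCALE[: cutoff + 1])
    return round(total, 1)


shuffle_share = share_at_least(SHUFFLE, "Sometimes")
revisit_share = share_at_least(REVISIT_DROPPED, "Sometimes")
print(shuffle_share, revisit_share)
```

Summing the rounded table entries gives 86.4% for at least occasional shuffling and 69.1% for at least sometimes revisiting dropped cards, which agrees with the 86.5% and 69.0% in the text to within rounding.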

Amount of practice

The choice of the number of times to study each digital

flashcard was most commonly made using a mixture of

Figure 1. Perceived accuracy of self-made and pre-made digital flashcards.

Note: Respondents gave separate accuracy ratings for self- and pre-made

digital flashcards on a five-level scale.


Table 4. How digital flashcards are used.

1. When using digital flashcards, what percentage of time are you engaging in the following activities? Note: These numbers should add up to 100% (n =

701)

#

Activity Mean percentage of time

Practising recall (self-testing) 58.5%

Studying, restudying, reading, or rereading 41.5%

2. Which of the following best describes how you tend to use digital flashcards? (n = 701)

Response Frequency

Spaced out (i.e., spread out) throughout the quarter 6.8%

A day or two before the exam 29.7%

Increasing gradually in frequency as the exam approaches 28.4%

The week of the exam 35.1%

3. In general, do you prefer to study digital flashcards as one big set or do you prefer to study digital flashcards in a series of smaller sets? (n = 701)

Response Frequency

I prefer to study one big set 49.2%

I prefer to study several smaller sets 50.8%

4. How many cards, on average, are in your digital flashcard sets for the [top three subjects that you use digital flashcards for]? (n = 701)

#

Ranking Mean number of cards

1st 76.8

2nd 55.5

3rd 45.1

5. How often do you shuffle your digital flashcard sets? That is, change the order in which those cards are viewed/used? (n = 701)

Response Frequency

Always 31.8%

Often 33.2%

Sometimes 21.4%

Rarely 9.3%

Never 4.3%

6. When you study with digital flashcards, which of the following items best describes how the decision to stop studying a card occurs? (n = 701)

Response Frequency

The digital flashcard programme has complete or most control. 23.0%

You (the learner) and the programme have equal control. 35.4%

You have complete or most control. 41.7%

7. Do you use any digital flashcard features that allow you to change how many times a card appears or when to stop studying a card (i.e., “starring” or

“study later” functions)? (n = 701)

Response Frequency

Yes, I have used such functions when available 63.9%

No, I have not used such functions when available 26.1%

No, such functions have not been available 10.0%

8. Do you use any “drop” (remove from further study) functions when studying with digital flashcards? (n = 701)

Response Frequency

Yes, I have used such functions when available 34.2%

No, I have not used such functions when available 49.8%

No, such functions have not been available 15.8%

Response left blank 0.1%

9. How often do you go back to the digital flashcards that you dropped or did not mark for further study? (n = 701)

Response Frequency

Always 8.6%

Often 17.4%

Sometimes 43.1%

Rarely 21.7%

Never 7.1%

Response left blank 2.1%

10. Imagine that you are learning with a set of digital flashcards. Which of the following would influence how many times you studied a card? (n = 701)

m

Response Frequency

How easily you could remember the card 75.9%

How long you planned to study that day 17.0%

How many times you could correctly recall the card 74.5%

The importance of the particular card 45.6%

Other 0.1%

11. Imagine that you are learning with a set of digital flashcards. How do you decide when you have studied a digital flashcard sufficiently and DO NOT

need to study it again? (n = 701)

m

Response Frequency

I felt like I immediately knew the correct answer 66.2%

I recalled the content on the flashcard correctly one time 7.1%

I recalled the content on the flashcard correctly more than once 69.5%

I recalled the content on the flashcard quickly 47.2%

I understood the information 55.2%

Other 0.7%

12. In general, how many times do you successfully recall the information on the “back” of a DIGITAL flashcard before you drop the DIGITAL flashcard from

study? (Note: your answer needs to be a number or “N/A”.) (n = 701)

#

Response Frequency*

0 0.1%

1 0.6%

2 7.8%


fluency (75.9%) and accuracy (74.5%). Respondents gener-

ally chose to stop studying when they had recalled the

information on the flashcard correctly more than once

(69.5%), but they also reported using retrieval fluency as a cue for when
to stop studying with nearly the same frequency (66.2%). That students in
the present study reported correctly recalling items more than once before
dropping them contrasts with lab-based experiments, which find that
participants tend to drop items after one correct recall (e.g., Ariel &
Karpicke, 2018; Dunlosky & Rawson, 2015; Karpicke, 2009; Kornell & Bjork,
2008), but aligns with the results of a prior survey on students’
flashcard usage behaviours (Wissman et al., 2012). The present results may
thus have been influenced by differences between lab and non-lab
environments (see General Discussion). Regardless, the endorsement of both
studying to

criterion (most commonly 3 or 5 correct recalls of a given

flashcard) and fluency cues demonstrates that although

most students are aware that more practice is helpful (par-

ticularly for self-testing), they also use potentially inaccur-

ate metacognitive judgments based on their own feelings

to regulate their learning.

Use of correct answer feedback

Only 52.8% of respondents reported that they always

check the correct answer on the back of their digital flash-

cards, which mirrors patterns observed with paper flash-

cards (Wissman et al., 2012). Over half of respondents

also reported that they chose not to check because they

were confident that the information that they had

retrieved was accurate (53.5%), with feelings of fluency

as the next most common reason (38.8% endorsing

quick or easy retrieval as their rationale). These results

raise the prospect that students may not be giving them-

selves the feedback necessary for effective self-regulated

learning when using digital flashcards.

Using digital flashcards with peers

As detailed in Table 5, although many respondents

reported sharing their digital flashcards by sending them

to classmates, friends, or study groups (≥ 38.5%), most
rarely or never use their digital flashcards with a

study partner (65.9%). Of those who did report using

them with a partner, the most common activity was

taking turns quizzing each other (25.2%). Moreover, the

use of digital flashcards with peers yielded relatively

diverse assessments of impacts on motivation, difficulty,

efficiency, and other characteristics (see Table 5, Question

4 for detailed results).

College students’ practices and attitudes involving

digital versus paper flashcards

As displayed in Table 6, among users of digital flashcards,

the vast majority, 91.6%, reported also using paper flash-

cards. All remaining results in this section are drawn

from those 642 participants. As an average percentage of

total study time, including activities that did not involve

any flashcards, digital flashcards were far more frequently

used (56.6%) than paper flashcards (24.9%). Respondents

also expressed an overall preference for digital flashcards,

with 60.1% preferring them over paper flashcards. The

most commonly stated reason for preferring the digital

medium was the convenience and ease of obtaining and

2.5** 0.3%

3 23.7%

3.5** 0.1%

4 6.7%

5 18.0%

5.5** 0.1%

6 1.1%

7 2.1%

8 1.7%

10 5.4%

N/A 25.1%

13. When learning with digital flashcards, how often do you check whether the information that you recalled is correct by looking at the “back” of the

flashcard? (n = 701)

Response Frequency

Always 52.8%

Often 36.1%

Sometimes 9.8%

Rarely 1.1%

Never 0.1%

14. If you ever choose NOT to check if the information you recalled is correct by looking at the back of the digital flashcard, what contributes to your

decision? (n = 701)

m

Response Frequency

I was confident that the information I retrieved was accurate 53.5%

I knew I was going to go back and study that information again 9.1%

I retrieved the information quickly 18.0%

I retrieved the information easily 20.8%

Other 0.4%

I always check 45.6%

Note: (*) Ten (1.43%) responses were not included because respondents listed percentages, and another 36 (5.14%) responses were not included because

respondents listed a number between 15 and 200. (**) Some respondents gave ranges (e.g., “2–3 times”), which were coded as the median of the range.


using digital flashcards, although some respondents pre-

ferred handwriting and reported that it helped them

learn the material more than digital flashcards did (for

detailed results see Table 6, Question 3b).

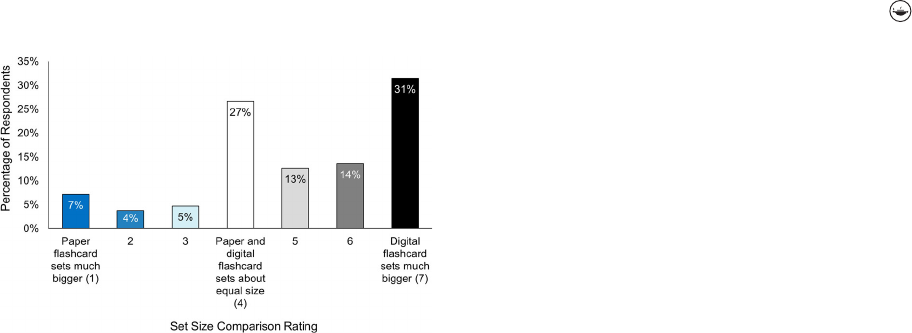

As shown in Figure 2, respondents tended to use either the same size
(26.6%) or much larger digital flashcard sets (31.5%) than paper ones. That
result may speak to the convenience of using digital flashcards, and
specifically the greater ease of creating and storing a large set of
digital flashcards than doing the same with paper versions. It also
suggests that digital flashcards may facilitate greater use of
within-session spacing. In addition, respondents reported that they were
more likely to drop paper flashcards (35.5%) than digital flashcards
(22.9%); in fact, nearly twice as many respondents reported that they were
much more likely to drop paper flashcards than digital flashcards (16.8%)
as reported the reverse (8.9%). That finding further suggests that digital
flashcard sets may be more conducive to achieving inter-item spacing within
single study sessions.
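The reasoning that larger sets and less dropping favour inter-item spacing can be illustrated with a toy model. The sketch below assumes that a learner cycles through a deck in a fixed order and, optionally, drops a fixed number of cards after each pass. The function names, parameters, and dropping rule are illustrative assumptions, not a description of any particular platform or of respondents' actual behaviour.

```python
def presentation_order(deck_size, passes, drop_per_pass=0):
    """Return the sequence of card IDs shown across several passes.

    After each pass, `drop_per_pass` cards are removed from the front of
    the deck (at least one card is always kept). Hypothetical toy model.
    """
    deck = list(range(deck_size))
    order = []
    for _ in range(passes):
        order.extend(deck)
        keep_from = min(drop_per_pass, len(deck) - 1)
        deck = deck[keep_from:]
    return order


def mean_lag(order):
    """Mean number of other cards shown between repetitions of a card."""
    last_seen = {}
    lags = []
    for position, card in enumerate(order):
        if card in last_seen:
            lags.append(position - last_seen[card] - 1)
        last_seen[card] = position
    return sum(lags) / len(lags) if lags else 0.0


# A larger deck with no dropping yields more intervening cards per repetition.
small_deck_lag = mean_lag(presentation_order(deck_size=4, passes=3))
large_deck_lag = mean_lag(presentation_order(deck_size=77, passes=2))
# Dropping one card per pass shrinks the deck and therefore the spacing.
dropping_lag = mean_lag(presentation_order(deck_size=4, passes=3, drop_per_pass=1))
print(small_deck_lag, large_deck_lag, dropping_lag)
```

In this toy model, the lag between repetitions of a card equals the number of other cards remaining in the deck, so both smaller decks and more dropping reduce within-session spacing.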

With respect to the use of feedback, there was little differ-

ence in respondents’ likelihood of checking the back of paper

versus digital flashcards (see Table 6, Question 4). In fact,

70.9% of respondents reported that they were equally

likely to check the backs of their digital and paper flashcards.

That high degree of equivalence suggests that the choice to

use correct answer feedback in the context of flashcard learn-

ing is not heavily influenced by medium.

As detailed in the Appendix, respondents’ answers to

other questions addressing paper flashcard use – which

Table 5. Using digital flashcards with peers.

1. Do you share your digital flashcards in any of the following ways? (n = 701)

m

Response Frequency

Send to classmates 38.5%

Send to friends 47.9%

Share with study groups 41.1%

Make available for public use 24.1%

Other 0.6%

I do not share my digital flashcards 33.7%

2. How often do you use digital flashcards with a study partner or friend? (n = 701)

Response Frequency

Always 1.3%

Often 9.6%

Sometimes 23.3%

Rarely 23.7%

Never 42.2%

3. If you do use digital flashcards with a study partner or friend, how do you do so? Please answer in 1–2 sentences. If you DO NOT use digital flashcards

with a study partner or friend, please type “N/A.” (n = 701)

f

Response category Frequency

Take turns quizzing each other 25.2%

Test themselves simultaneously (i.e., both look at same side) 3.9%

Make or create the cards together (or split the work) 4.3%

Send the cards to each other or share the cards and/or links 15.7%

Study or review the cards simultaneously or together 5.4%

Cross-check information with each other’s sets 1.9%

Compare errors and/or explain errors to each other 0.9%

N/A 55.3%

Other 0.6%

Response left blank 0.3%

4. How is your experience of using digital flashcards alone different from your experience using them with a partner? (Assuming you do use flashcards with

a partner; if not, please write “I do not use digital flashcards with a partner.”) Please answer in 1–2 sentences. (n = 701)

f

Response Category Frequency

Partner provides accountability for errors 6.7%

Partner provides greater focus (accountability) 1.6%

Respondent is more motivated to study with a partner 3.6%

Respondent has greater difficulty focusing with a partner 2.7%

Respondent experiences greater pressure with a partner 1.6%

More mental energy required with a partner 0.1%

More time spent per card with a partner 0.1%

Making digital flashcards with a partner is efficient/easier 1.3%

Respondent can proceed at own pace when alone 7.4%

Using digital flashcards alone is more efficient 4.3%

Respondent self-tests when alone instead of testing each other 2.9%

Respondent answers silently when alone instead of aloud 4.4%

Testing is easier with a partner 1.3%

Partner provides more, different, or missing information 3.4%

Partner generates more discussion (rather than pure recall) 5.6%

Partner helps figure out how to remember a card 1.0%

More helpful to use digital flashcards with a partner 3.1%

I do not use digital flashcards with a partner 55.5%

No difference with a partner vs. alone 2.7%

Other 6.4%

Response left blank 0.9%


Table 6. Practices and attitudes involving digital versus paper flashcards.

1. Do you use, or have you ever used, paper flashcards? (n = 701)

Response Frequency

Yes 91.6%

No 8.4%

2. Out of all of your learning activities, what percentage involves using digital flashcards? What percentage involves using paper flashcards? Please provide

your best estimate. Note: These numbers do not necessarily need to add to 100. (n = 701)

#

Activity Mean percentage of time

Digital flashcards 56.6%

Paper flashcards 24.9%

3a. Do you prefer paper or digital flashcards? (n = 642)

f

Response Frequency

Paper flashcards 25.7%

Digital flashcards 60.1%

Equal preference 1.4%

Preference depends on situation 4.3%

Response left blank 3.3%

3b. Why do you prefer one over the other? Please explain in 1–2 sentences. (n = 642)

f

Response category Frequency

Digital flashcards are easier to search through 1.9%

Digital flashcards are easier to manage, keep track of, and/or organise 11.8%

Digital flashcards are easier, faster, cheaper, or more convenient to make 25.9%

Digital flashcards have unlimited storage and/or take up less space 6.1%

Digital flashcards are more convenient or almost always accessible 37.9%

Digital flashcards are easier to edit 2.6%

Typing is easier than, faster than, or preferable to handwriting 6.2%

Digital flashcards allow copy-paste functions 2.0%

Digital flashcards are more effective 0.3%

Digital flashcards have pre-made sets available (easier and faster to find) 8.4%

Digital flashcards provide more options or features (e.g., games) 11.7%

Some digital flashcards have pre-determined spaced repetition algorithms 0.6%

Paper flashcards show visual representation of learning (e.g., stack sizes) 1.1%

Easier to draw on paper flashcards 2.8%

Prefer handwriting, which helps with retention 19.6%

Paper flashcards are more effective (“learn more”) 3.7%

Prefer looking at paper over looking at a screen 2.6%

Prefer using something physical 5.8%

Do not know how to make digital flashcards 0.2%

Paper flashcards are wasteful 8.4%

No reason given (only stated preference) 1.9%

Other 10.4%

Response left blank 9.2%

4. Please rate how likely you are to check the back of the flashcards when using paper flashcards compared to using digital flashcards. (n = 642)

Rating Frequency

I am much more likely to check the back of the flashcard when using paper. (1) 6.1%

2 3.7%

3 6.9%

I am equally likely to check the back of paper and digital flashcards. (4) 70.9%

5 5.6%

6 2.6%

I am much more likely to check the back of the flashcard when using digital. (7) 4.2%

5. Please rate how likely you are to drop paper flashcards relative to digital flashcards. (n = 642)

Rating Frequency

I am much more likely to drop paper than digital flashcards. (1) 16.8%

2 9.5%

3 9.2%

I am equally likely to drop paper and digital flashcards. (4) 41.6%

5 7.2%

6 6.9%

I am much more likely to drop digital than paper flashcards. (7) 8.9%

6. Please rate how often you access paper flashcards compared to digital flashcards in the following situations. (n = 642)

Rating During class While studying While travelling or commuting

I access paper flashcards much more (1) 7.9% 8.9% 5.6%

2 6.5% 6.2% 5.0%

3 4.4% 4.4% 2.2%

I access both types of flashcards equally (4) 22.6% 16.0% 9.8%

5 6.7% 10.1% 5.9%

6 13.4% 18.1% 15.1%

I access digital flashcards much more (7) 38.5% 36.3% 56.4%


were analogous to questions previously posed for digital

flashcards – resembled their earlier answers (cf. Wissman

et al., 2012). Responses to questions addressing types of
learning activities, how many times a flashcard was

used, and when to stop using a flashcard were all

similar for paper and digital flashcards. Hence, students’

cues and habits when engaging in self-regulated learning

do not appear to differ in any great way between

mediums.

Exploratory analyses

Why students choose self-made versus pre-made

digital flashcards

To further examine the surprising finding that most

respondents chose to rely on pre-made digital flashcards

despite tending to doubt the accuracy or quality of such

cards, we conducted correlations that related respondents’

frequency of using self- or pre-made digital flashcards with

their reported reasons for doing so (Table 3, Questions 4 to 6).
We first categorised particular answer choices in
Question 6 into broad themes of interest as follows: (a)

quality assurance (I can control the information that goes

on the card, The flashcard sets I make are more accurate

than those found online, and The flashcard sets I make are

higher-quality than those found online) and (b) self-made

advantages (The act of making the flashcards helps me

learn the material and The act of making the flashcards

helps me know what I need to study).

A series of correlations then related percentage of time using self-made
digital flashcards with the number of answers chosen from each theme (a
and b). Respondents who reported greater time spent using self-made
digital flashcards endorsed more of the answer choices in the quality
assurance theme, r (699) = .40, p < .001. Similarly, respondents who
reported greater time spent using self-made digital flashcards endorsed
more of the answer choices in the self-made advantages theme, r (699) =
.43, p < .001. Endorsement of answer choices from these two themes was
also correlated, r (699) = .37, p < .001. That is, those who selected more
of the

choices in the quality assurance theme also tended to

select more of the choices in the self-made advantages

theme. It is therefore likely that participants who con-

sidered the quality of the digital flashcards they made

themselves were also attuned to the potential benefits

of the action of making them, and vice versa.
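As a minimal sketch of the analysis described above, the following example counts how many answer choices each respondent endorsed within a theme and correlates that count with the percentage of time spent using self-made flashcards. The respondent records are made-up toy values used purely for illustration, and the field names, answer-choice labels, and helper functions are hypothetical; the survey data themselves are not reproduced here. With n respondents, Pearson's r has n − 2 degrees of freedom, which is why correlations based on 701 respondents are reported as r (699).

```python
from math import sqrt

# Hypothetical labels for the answer choices grouped into each theme.
QUALITY_ASSURANCE = {"control_information", "more_accurate", "higher_quality"}
SELF_MADE_ADVANTAGES = {"helps_learn", "helps_know_what_to_study"}

# Made-up toy records (NOT survey data): percentage of time using self-made
# flashcards and the set of reasons each respondent endorsed.
respondents = [
    {"self_made_pct": 90, "reasons": {"control_information", "more_accurate", "helps_learn"}},
    {"self_made_pct": 60, "reasons": {"control_information", "helps_learn", "helps_know_what_to_study"}},
    {"self_made_pct": 40, "reasons": {"higher_quality"}},
    {"self_made_pct": 10, "reasons": set()},
    {"self_made_pct": 25, "reasons": {"helps_learn"}},
]


def theme_count(reasons, theme):
    """Number of endorsed answer choices that belong to a theme."""
    return len(reasons & theme)


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


time_self_made = [r["self_made_pct"] for r in respondents]
quality_counts = [theme_count(r["reasons"], QUALITY_ASSURANCE) for r in respondents]
advantage_counts = [theme_count(r["reasons"], SELF_MADE_ADVANTAGES) for r in respondents]

r_quality = pearson_r(time_self_made, quality_counts)
r_advantages = pearson_r(time_self_made, advantage_counts)
degrees_of_freedom = len(respondents) - 2
print(f"r({degrees_of_freedom}) = {r_quality:.2f} (quality assurance)")
print(f"r({degrees_of_freedom}) = {r_advantages:.2f} (self-made advantages)")
```

The same procedure applies to the pre-made themes; only the theme definitions and the answer choices being counted change.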

Using the same process as with Question 6, we cate-

gorised particular answer choices in Question 5 into the

following broad themes: (a) quality assurance (I trust the

information on pre-made flashcard sets, Pre-made

flashcard sets are higher quality than those I can self-gener-

ate, and Pre-made flashcard sets are more accurate than

those I can self-generate) and (b) efficiency (I don’t have
the time to make my own flashcard sets and Pre-made

flashcard sets are easily available). As expected, respon-

dents who reported more time using self-made digital

flashcards endorsed fewer answer choices in the quality

assurance theme, r (699) = -.23, p < .001, and fewer

answer choices in the efficiency theme, r (699) = -.37, p

< .001. Endorsement of these two themes was also corre-

lated, r (699) = .20, p < .001. In other words, respondents

who reported less belief in pre-made sets’ accuracy and

less concern about the ease of generating flashcard sets,

as compared to accessing pre-made sets, used pre-made

flashcard sets less frequently.

Rates of self-testing with digital versus paper

flashcards

To examine whether students engage in similar habits

when using digital and paper flashcards, we correlated

participants’ percentage of study time self-testing using

digital flashcards (Table 4, Question 1) with percentage

of time self-testing with paper flashcards (Appendix, Ques-

tion 1). Frequency of self-testing across these two

mediums was significantly correlated such that partici-

pants who reported higher rates of self-testing when

using digital flashcards also reported higher rates of self-

testing when using paper flashcards, r (640) = .53, p

< .001. This finding adds further support to the conclusion

that neither medium encourages greater self-testing than

the other, and that respondents’ study habits remain rela-

tively stable across both paper and digital flashcard use.

Grade point average

Finally, to test for possible relationships between students’

self-reported grade point average (GPA) and their digital

flashcard usage patterns, we also completed three sets

of exploratory correlational analyses. These analyses

were conducted on the 658 participants who provided

such data (those who chose not to report their GPA, did

not have an established GPA yet, or did not use a 4.0

scale were excluded from these analyses). None of the cor-

relations were statistically significant: Participants who

reported using digital flashcards for more types of information
(Table 2, Question 8) did not report significantly
higher GPAs, p = .35; GPA was not significantly correlated

Figure 2. Comparison of digital and paper flashcard set sizes. Note:

Respondents rated the relative size of digital versus paper flashcard sets

on a seven-point scale.


with the frequency of using particular methods to put

information on digital flashcards (Table 3, Question 1),

ps ≥ .16, and GPA was not significantly correlated with

greater use of self-made (or pre-made) digital flashcards

(Table 3, Question 4), p = .64. Hence, digital flashcard

usage patterns do not appear to be predictive of academic

performance, at least as indexed by GPA. It should be

noted, however, that GPA can be affected by factors unre-

lated to digital flashcard use, including the type and

difficulty of coursework, and that GPA is a less precise

measure of learning than, for example, specific course

grades. In addition, many respondents reported that

they used digital flashcards to study for standardised

tests, which do not have any bearing on GPA.

General discussion

The foregoing survey investigated the use of digital flash-

cards during self-regulated learning among college stu-

dents. A host of interesting insights emerged. First,

digital flashcards are remarkably popular. The vast majority

of students report using them. Second, digital flashcards

are overwhelmingly regarded as being beneficial for study-

ing or learning. However, when it comes to using digital

flashcards to their fullest potential, at least as informed

by evidence-based learning research, our results reveal a

mixed picture. Among today’s college students, some usage patterns adhere
to learning science-based principles and practices, whereas in other cases
students tend to use flashcards in highly suboptimal ways. We next consider
these usage patterns in

light of learning practices that are highlighted in the desir-

able difficulties framework and supported by evidence-

based learning research.

Do digital flashcard usage patterns align with

evidence-based learning practices?

One of the most promising results in our survey was the

finding that college students commonly use digital flash-

cards to engage in retrieval practice. Hence, it appears

that researcher and instructor recommendations to use

flashcards for the purposes of beneficial self-testing are

being heeded. That result is in line with the general popu-

larity of retrieval practice as a learning strategy (e.g.,

Hartwig & Dunlosky, 2012; Pan et al., 2020), although stu-

dents tend to regard self-testing as a method of assessing

one’s own learning as opposed to enhancing it (Pan &

Bjork, 2022). Less promisingly, a sizeable minority of

college students report using digital flashcards to

engage in non-retrieval-based strategies (e.g., rereading)

that have dubious pedagogical value (Callender & McDa-

niel, 2009; Dunlosky et al., 2013).

When using digital flashcards to engage in retrieval

practice, many students do not consistently take advan-

tage of correct answer feedback. Instead, they use a

variety of factors to decide whether or not to do so,

some of which are evidence-based (e.g., the prior

number of correct retrievals; Kornell & Bjork, 2008), and

others of which are not (e.g., retrieval speed and fluency;

Benjamin et al., 1998). That failure to consistently use feed-

back likely deprives students of learning opportunities. It

should be noted, however, that feedback in the case of

successful retrieval from memory may not always be

necessary (Pashler et al., 2005), and retrieval practice is

generally effective at enhancing learning even in the

absence of correct answer feedback (Roediger et al.,

2006). Nevertheless, the benefits of retrieval practice are

often augmented by feedback (Rowland, 2014), and

researcher recommendations to use retrieval practice are

typically accompanied by the advice to use feedback.

Observed usage patterns for digital flashcards are

mixed with respect to spacing. Consistent with the well-

established pattern of cramming for exams (e.g., McIntyre

& Munson, 2008), college students do not often begin

using their digital flashcard sets until close to the exam

date. Such cramming likely deprives students of valuable

time that could have been used to engage in beneficial

spacing between study sessions (Sobel et al., 2011).

Indeed, the vast majority of college students did not

endorse a strategy of “spacing out” the use of digital flash-

cards throughout an academic term, contrary to evidence-

based recommendations to do so. More promisingly, they

tended to endorse the use of starring functions more than

dropping functions, which implies the occurrence of inter-

item spacing during learning sessions. It was also generally

common for students to endorse revisiting individual

flashcards more than once, which can be a further oppor-

tunity for inter-item spacing to occur. It should be noted,

however, that some flashcard programmes allow dropping

items from study after one correct retrieval attempt, which

may inadvertently encourage less effective learning strategies

(see Table 1 of Dunlosky & O’Brien, 2020 for a comparison of several digital flashcard programmes).
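
The contrast between dropping a flashcard after one correct retrieval and keeping it in rotation can be illustrated with a short simulation. The following is only a minimal sketch under stated assumptions: the function `run_session`, its `criterion` and `max_trials` parameters, and the learner model passed as `answers_correct` are hypothetical names introduced for illustration and do not correspond to any particular flashcard programme.

```python
from collections import deque


def run_session(cards, answers_correct, criterion=1, max_trials=1000):
    """Cycle through cards, dropping each one after `criterion` correct retrievals.

    cards: list of card identifiers.
    answers_correct: callable(card, attempt_number) -> bool; a hypothetical
        stand-in for the learner's retrieval success on that attempt.
    max_trials: safety cap so the loop ends even if a card is never answered.
    Returns the order in which cards were presented.
    """
    queue = deque(cards)
    correct = {card: 0 for card in cards}
    attempts = {card: 0 for card in cards}
    order = []
    while queue and len(order) < max_trials:
        card = queue.popleft()
        attempts[card] += 1
        order.append(card)
        if answers_correct(card, attempts[card]):
            correct[card] += 1
        if correct[card] < criterion:
            # Not yet learned to criterion: revisit after the remaining cards,
            # which places other items between repetitions of this card.
            queue.append(card)
    return order


always_right = lambda card, attempt: True
print(run_session(["a", "b", "c"], always_right, criterion=1))
# ['a', 'b', 'c']
print(run_session(["a", "b", "c"], always_right, criterion=3))
# ['a', 'b', 'c', 'a', 'b', 'c', 'a', 'b', 'c']
```

With a criterion of one, each card is seen once and never revisited; with a higher criterion, each card recurs with the other cards intervening, which is the inter-item spacing discussed above.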

Another concerning aspect of college students’ digital

flashcard practices is an apparent reliance on pre-made

flashcard sets even given doubts about the accuracy or

quality of those sets. This pattern, which was relatively

widespread, suggests that college students engage in

an ease-accuracy trade-off when deciding to make or

obtain digital flashcards – that is, they are willing to

take the risk that the digital flashcards that they use

are suboptimal in exchange for the convenience of

quickly obtaining and readying them for use. That ease-

accuracy trade-off assumes that pre-made flashcard sets

can be incomplete or inaccurate, and that students’

self-made flashcard sets may be of higher quality than

the ones that they can obtain online. Even if those

assumptions are not met, however, a reliance on pre-

made digital flashcards likely deprives students of the

benefits of generating their own content (cf. Bertsch

et al., 2007; Slamecka & Graf, 1978). Recent work has

demonstrated that engaging in generation does indeed

augment the benefits of learning with digital flashcards

(Pan et al., 2022). Interestingly, some of our survey

respondents seemed aware of such potential benefits

(given that the endorsement of statements related to

the benefits of generating flashcards was correlated

with use of self-made flashcards during study).

It is also notable that many college students endorsed

the use of shuffle functions with digital flashcards. Doing

so can be a way to introduce not just inter-item spacing

but also interleaving of items – that is, the intermixing of

different concepts, categories, or other types of materials

during learning (Kang, 2016). The interleaving of closely

related concepts when studying, or interleaved practice,

has shown promise at enhancing learning and memory

in such educationally-relevant domains as mathematics

(e.g., Rohrer, 2012) and physics (e.g., Samani & Pan,

2021). However, the optimal approach with which

materials are interleaved has yet to be fully established

(particularly for such domains as language learning; e.g.,

Pan, Lovelett, et al., 2019), and the evidence base in

favour of interleaved practice is less established than

that for the other aforementioned effective learning

strategies.

How do usage patterns compare for digital versus paper flashcards?

Although college students can use digital flashcards and

paper flashcards in similar ways (see Appendix), our

findings reveal that college students view digital flashcards

as distinct from paper flashcards: Our sample indicated a

clear preference for digital over paper flashcards, citing

the convenience of creating digital flashcards and the

ease of transporting and accessing them

as primary reasons. Indeed, our results imply a shift in

college students’ learning habits away from paper to

digital flashcards. That shift is in line with educational

trends showing an increasing move towards digital modal-

ities (National Center for Education Statistics, 2019), accel-

erated by changes in education due to the COVID-19

pandemic.

With respect to evidence-based learning strategies,

some of the usage patterns for digital flashcards resemble

those reported for paper flashcards (e.g., Wissman et al.,

2012), including the relative popularity of retrieval prac-

tice, the underutilisation of feedback, and frequent cram-

ming before exams. However, perhaps because of the

ease of creation, storage, and portability of digital flash-

cards, college students tend to make larger digital

flashcard sets than paper flashcard sets, which may yield

more opportunities for inter-item spacing than with

paper flashcards. In addition, given the greater accessibil-

ity of pre-made digital flashcards, it seems likely that

student users of digital flashcards engage less frequently

in the productive generation of flashcard content than

with paper flashcards (which are commonly created by

methodically writing down to-be-learned information).

A unique characteristic of digital flashcards, as com-

pared to paper flashcards, is that many platforms include

extra features that may enhance learning. For instance,

nearly one-quarter of college students reported using

digital flashcard platforms that had complete or near

total control over when a flashcard was removed from

further study. Designers of these platforms (e.g., Anki)

seem aware that students do not always make optimal

decisions during self-regulated study, and therefore offer

features (e.g., spaced repetitions) that make such decisions

for them. Hence, the use of such platforms (as compared to

using paper flashcards or entirely self-directed digital

flashcard services) may actually aid students seeking to

improve their learning, especially students who

may not have ample support or knowledge about

evidence-based learning techniques. Moreover, digital

flashcard algorithms (e.g., Colbran et al., 2018; Edge et al., 2012)

are not susceptible to overconfidence or influenced

by retrieval fluency, and may therefore, at times, make

more sophisticated learning decisions than students

potentially might make. Similarly, adaptive schedules of

practice (e.g., Mettler et al., 2016) can account for vari-

ations in strength of learning across different items

using, for example, response time in addition to accuracy

to maximise learning based on ongoing performance.

Students could potentially even learn valuable learning

practices from such features, and the customisation

offered by many flashcard services (e.g., altering the

spacing between repetitions) could also provide a means

to explore the effectiveness of different learning strategies

and associated metacognitive judgments.
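
To make concrete how a platform might take scheduling decisions out of the learner's hands, the following minimal sketch uses a Leitner-style box system. This is a swapped-in illustrative technique and an assumption on our part, not the algorithm used by Anki or by any platform or study cited above. The names `Card`, `review`, and `due_cards`, as well as the interval values, are hypothetical.

```python
from dataclasses import dataclass

# Assumed review intervals (in days) for each box; the values are illustrative only.
INTERVALS_DAYS = (1, 2, 4, 8, 16)


@dataclass
class Card:
    prompt: str
    box: int = 0      # index into INTERVALS_DAYS
    due_day: int = 0  # day number on which the card is next due


def review(card, was_correct, today):
    """Update a card's box and due day from retrieval accuracy alone."""
    if was_correct:
        # Correct retrieval: move up one box, so the next gap is longer.
        card.box = min(card.box + 1, len(INTERVALS_DAYS) - 1)
    else:
        # Failed retrieval: return to the first box, so the card comes back soon.
        card.box = 0
    card.due_day = today + INTERVALS_DAYS[card.box]
    return card


def due_cards(cards, today):
    """Return the cards scheduled for review on or before `today`."""
    return [card for card in cards if card.due_day <= today]


card = Card("mitochondria")
review(card, was_correct=True, today=0)
print(card.box, card.due_day)  # 1 2
review(card, was_correct=False, today=2)
print(card.box, card.due_day)  # 0 3
```

Because the schedule in this sketch depends only on whether each retrieval attempt succeeded, it is unaffected by how fluent or confident the learner felt. An adaptive variant could additionally take response time as an input, but that extension is not shown here.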

Alternatively, however, such features may take away

crucial aspects of what makes self-regulated learning

“self-regulated” and encourage students to approach

learning sessions on “autopilot,” resulting in a dependence